Execute the following commands one by one.

`!git clone`

Execute the following command to see the initial setup instructions. It will display instructions on how to obtain the access token, and will ask you to execute the following command.

One possible method of archiving is to convert the folder containing your dataset into a ‘.tar’ file. The code snippet below shows how to convert a folder named “Dataset” in the home directory to a “dataset.tar” file, from your Linux terminal.

`tar -cvf dataset.tar ~/Dataset`

Alternatively, you could use WinRAR or 7-Zip, whichever is more convenient for you.

Open Google Colab and start a new notebook. Clone this GitHub repository. I’ve modified the original code so that it can add the Dropbox access token from the notebook.
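If you would rather do the archiving step from Python (for example on Windows, or inside a notebook cell), the standard-library `tarfile` module can produce the same kind of uncompressed archive as the `tar -cvf` command. The sketch below is only an illustration: the helper names `archive_folder` and `extract_archive` are my own and are not part of the repository mentioned in this post.

```python
import tarfile
from pathlib import Path


def archive_folder(folder: str, archive_path: str) -> int:
    """Pack `folder` into an uncompressed .tar file.

    Returns the number of regular files stored in the archive.
    (Hypothetical helper, named here for illustration only.)
    """
    source = Path(folder).expanduser()
    if not source.is_dir():
        raise NotADirectoryError(f"{source} is not a folder")

    # Mode "w" writes a plain tar without compression, like `tar -cvf`.
    with tarfile.open(archive_path, "w") as tar:
        # arcname stores only the folder name, not the full local path.
        tar.add(source, arcname=source.name)

    # Re-open the archive to count what was actually written.
    with tarfile.open(archive_path, "r") as tar:
        return sum(1 for member in tar.getmembers() if member.isfile())


def extract_archive(archive_path: str, destination: str) -> None:
    """Unpack a .tar file into `destination` (e.g. on the notebook side)."""
    with tarfile.open(archive_path, "r") as tar:
        tar.extractall(destination)
```

On your local machine you could call `archive_folder("~/Dataset", "dataset.tar")`, and after the transfer call `extract_archive("dataset.tar", ".")` in the notebook to get the folder back.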


This sets an upper limit on the amount of data that you can transfer at any moment. Transferring via Dropbox is relatively easy. You can also follow the same steps for other notebook services, such as Paperspace Gradient.

Uploading a large number of images (or files) individually will take a very long time, since Dropbox (or Google Drive) has to individually assign IDs and attributes to every image. Therefore, I recommend that you archive your dataset first.
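Because the free storage quota caps how much you can transfer in one go, it can help to check the size of your dataset before archiving and uploading it. The sketch below is a rough check under stated assumptions: the helper names are hypothetical, and I treat Dropbox's 2GB free tier as 2 GiB, which may not match how Dropbox counts it.

```python
from pathlib import Path

# Assumption: the 2GB free tier is interpreted as 2 GiB for this rough check.
FREE_QUOTA_BYTES = 2 * 1024**3


def folder_size_bytes(folder: str) -> int:
    """Sum the sizes of all regular files under `folder`, recursively."""
    root = Path(folder).expanduser()
    return sum(p.stat().st_size for p in root.rglob("*") if p.is_file())


def fits_in_quota(size_bytes: int, quota_bytes: int = FREE_QUOTA_BYTES) -> bool:
    """Return True if `size_bytes` does not exceed the storage quota."""
    return size_bytes <= quota_bytes
```

For example, `fits_in_quota(folder_size_bytes("~/Dataset"))` tells you whether the raw files would fit. A `.tar` archive is slightly larger than the sum of its files because of per-file headers and padding, so leave some margin if you are close to the limit.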

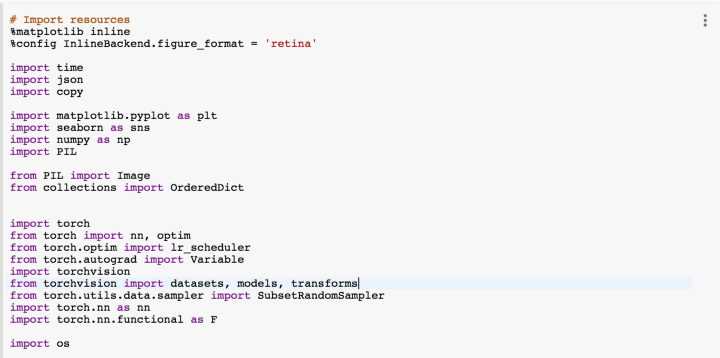

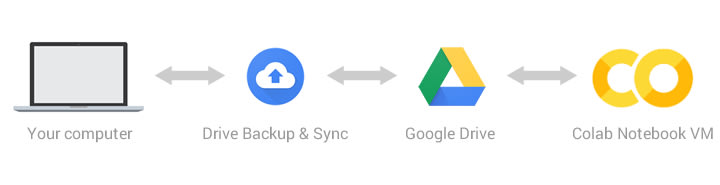

How to Upload large files to Google Colab and remote Jupyter notebooks

By Bharath Raj

Photo by Thomas Kelley on Unsplash

If you haven’t heard about it, Google Colab is a platform that is widely used for testing out ML prototypes on its free K80 GPU. If you have heard about it, chances are that you gave it a shot. But you might have become exasperated because of the complexity involved in transferring large datasets.

This blog compiles some of the methods that I’ve found useful for uploading and downloading large files from your local system to Google Colab. I’ve also included additional methods that can be useful for transferring smaller files with less effort. Some of the methods can be extended to other remote Jupyter notebook services, like Paperspace Gradient.

The most efficient method to transfer large files is to use a cloud storage system such as Dropbox or Google Drive.

Dropbox

Dropbox offers up to 2GB free storage space per account.
